Team "FingerSecret"

Creating social experiences with finger gestures.

Prototype candidate 1: Collaborative Music Improvisation

Description

Patients who live in an inpatient unit often feel lonely and have little contact with others. Because of physical limitations or their symptoms, they have few chances to move around or mingle with others. Since music is a universal human language and an art form that can immediately influence our emotions, we created this collaborative music improvisation to give patients a new way to communicate and make friends with other patients.

Improvising music with simple gestures lets patients express their own feelings and hear those of others in a comfortable way. Touchless gestures make it possible for patients with physical limitations to play this game; they do not even need to move their arms and can play from bed.

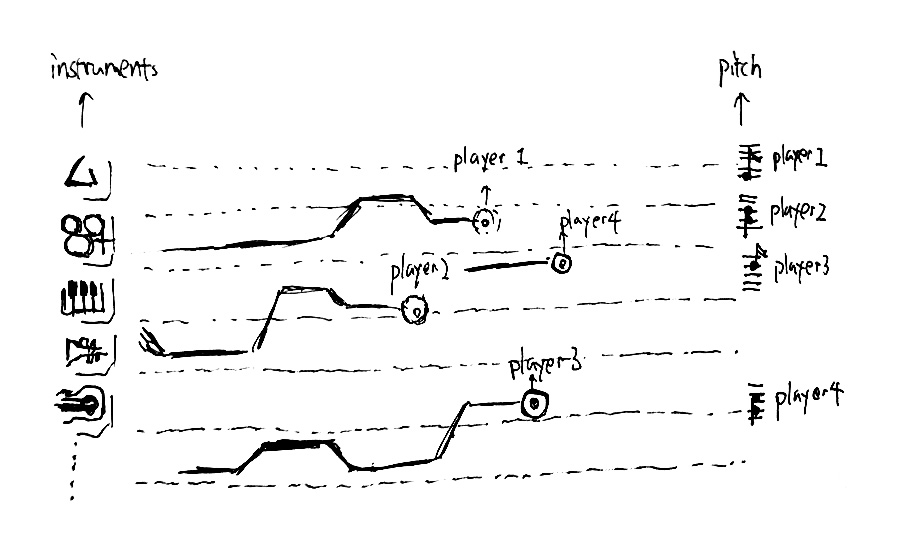

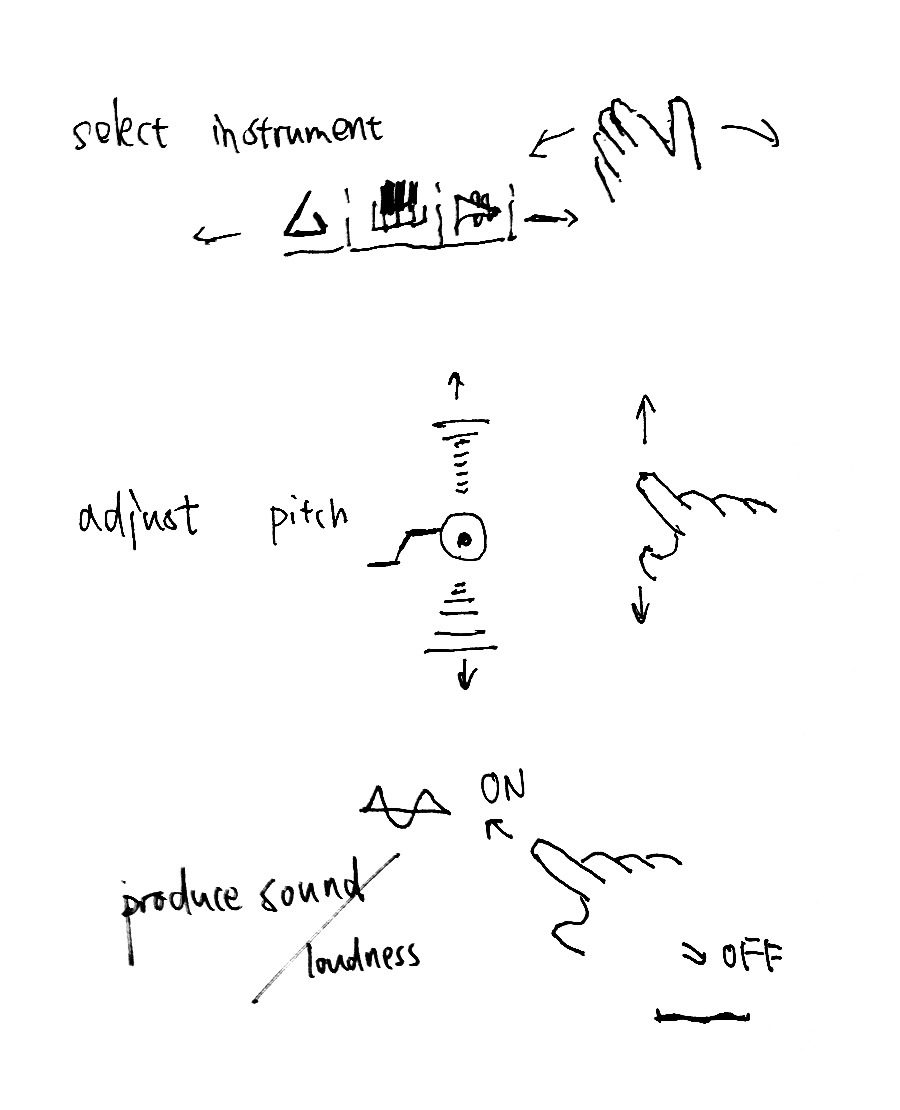

Music composition is generally considered difficult for people without formal musical training; in this application, we use gestures, visualization, and gamification to simplify the process. In-air gestures sensed by a Leap Motion controller drive the music generation. Specifically, the gestures are designed as follows:

- Poking: start improvising

- Withdrawing the finger: stop making sound

- Vertical finger movement: control pitch (a set of pre-determined chords, one linked to each altitude region)

- Horizontal finger movement: control volume

- Swipe gesture: change instrument

- Fist: end improvising
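The two continuous mappings in the list (finger height to chord, left-right position to volume) could be sketched roughly as follows. This is a minimal Python illustration under stated assumptions, not the team's implementation: the chord table, the millimetre ranges, and the use of MIDI note numbers and velocity are all assumptions, and `chord_for_height`, `volume_for_x`, and `normalize` are hypothetical names. The x and y inputs would come from the finger positions reported by the Leap Motion tracking data.

```python
# Hypothetical chord table: one pre-determined chord per altitude region,
# ordered from the lowest region to the highest. Notes are MIDI numbers.
CHORDS = [
    ("C major", (60, 64, 67)),
    ("F major", (65, 69, 72)),
    ("G major", (67, 71, 74)),
    ("A minor", (69, 72, 76)),
]

# Assumed tracking ranges in millimetres (illustrative, not from the Leap Motion SDK).
Y_MIN, Y_MAX = 100.0, 400.0   # finger height above the sensor
X_MIN, X_MAX = -150.0, 150.0  # finger left-right position


def normalize(value, lo, hi):
    """Clamp value to [lo, hi] and scale it to the range 0.0..1.0."""
    value = max(lo, min(hi, value))
    return (value - lo) / (hi - lo)


def chord_for_height(y):
    """Return the (name, notes) chord for the altitude region containing y."""
    t = normalize(y, Y_MIN, Y_MAX)
    # t == 1.0 would give len(CHORDS); clamp it into the top region.
    index = min(int(t * len(CHORDS)), len(CHORDS) - 1)
    return CHORDS[index]


def volume_for_x(x):
    """Map left-right position linearly to a MIDI velocity from 0 to 127."""
    return round(normalize(x, X_MIN, X_MAX) * 127)


if __name__ == "__main__":
    print(chord_for_height(120.0))  # low finger selects the lowest region
    print(volume_for_x(0.0))        # centred finger gives a mid-range volume
```

Quantizing height into a few discrete regions, rather than mapping it to a continuous pitch, is one way to keep every sound inside a pre-determined harmony, which helps make improvisation forgiving for players without musical training.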

System Feasibility

The Leap Motion controller reports accurate 3D positions of fingers. We track the positions of one or more fingers and extract their x, y, and z coordinates independently. The poking gesture, which moves the finger mainly along the z (depth) axis, may also cause small changes along the x and y axes, but these can easily be filtered out with a threshold. Intentional changes along x and y are relatively easy to detect because they are much larger than the noise introduced by poking.
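One simple way to implement such a threshold is a dead-band filter: the reported position on an axis only changes once the finger has moved more than a fixed distance from the last accepted value. The sketch below is an illustration, not the team's code; it assumes a 15 mm threshold and uses hypothetical names (`DeadbandFilter`, `update`). In practice the threshold would need to be tuned against recorded poking gestures. Only x and y are filtered here; detecting the poke itself along z is left out.

```python
class DeadbandFilter:
    """Suppress small x/y drift (such as the wobble caused by poking).

    The filtered position on each axis only moves when the raw position has
    moved more than `threshold_mm` away from the last accepted value.
    """

    def __init__(self, threshold_mm=15.0):  # assumed value; tune on real data
        self.threshold = threshold_mm
        self.ref_x = None
        self.ref_y = None

    def update(self, x, y):
        """Feed one raw (x, y) sample in millimetres; return the filtered (x, y)."""
        if self.ref_x is None:
            # The first sample becomes the initial reference position.
            self.ref_x, self.ref_y = x, y
        # Accept a new value on an axis only if the change exceeds the threshold.
        if abs(x - self.ref_x) > self.threshold:
            self.ref_x = x
        if abs(y - self.ref_y) > self.threshold:
            self.ref_y = y
        return self.ref_x, self.ref_y


if __name__ == "__main__":
    f = DeadbandFilter(threshold_mm=15.0)
    print(f.update(0.0, 200.0))   # first sample is accepted as-is
    print(f.update(5.0, 195.0))   # small drift from a poke is ignored
    print(f.update(40.0, 200.0))  # deliberate sideways move passes through
```

Comparing against the last accepted position, rather than the previous frame, matters because the sensor reports frames at a high rate: a slow, deliberate movement may change by only a fraction of a millimetre between consecutive frames and would otherwise never exceed the threshold.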

Interface Sketches and Interaction Mock-ups: