Team "FingerSecret"

Creating a social experience with finger gestures.

Wizard-of-Oz test design for Collaborative Music Improvisation

Sound Wizard

We built a simple site ( http://54.235.75.109 ) for the Sound Wizard to control the sound. The Sound Wizard triggers sounds based on the Leap visualizer rather than by watching the user's gestures directly, because we want a general idea of how good the Leap's output is.

Visual Wizard: controls the UI mock-up and visualization.

Prototype 1

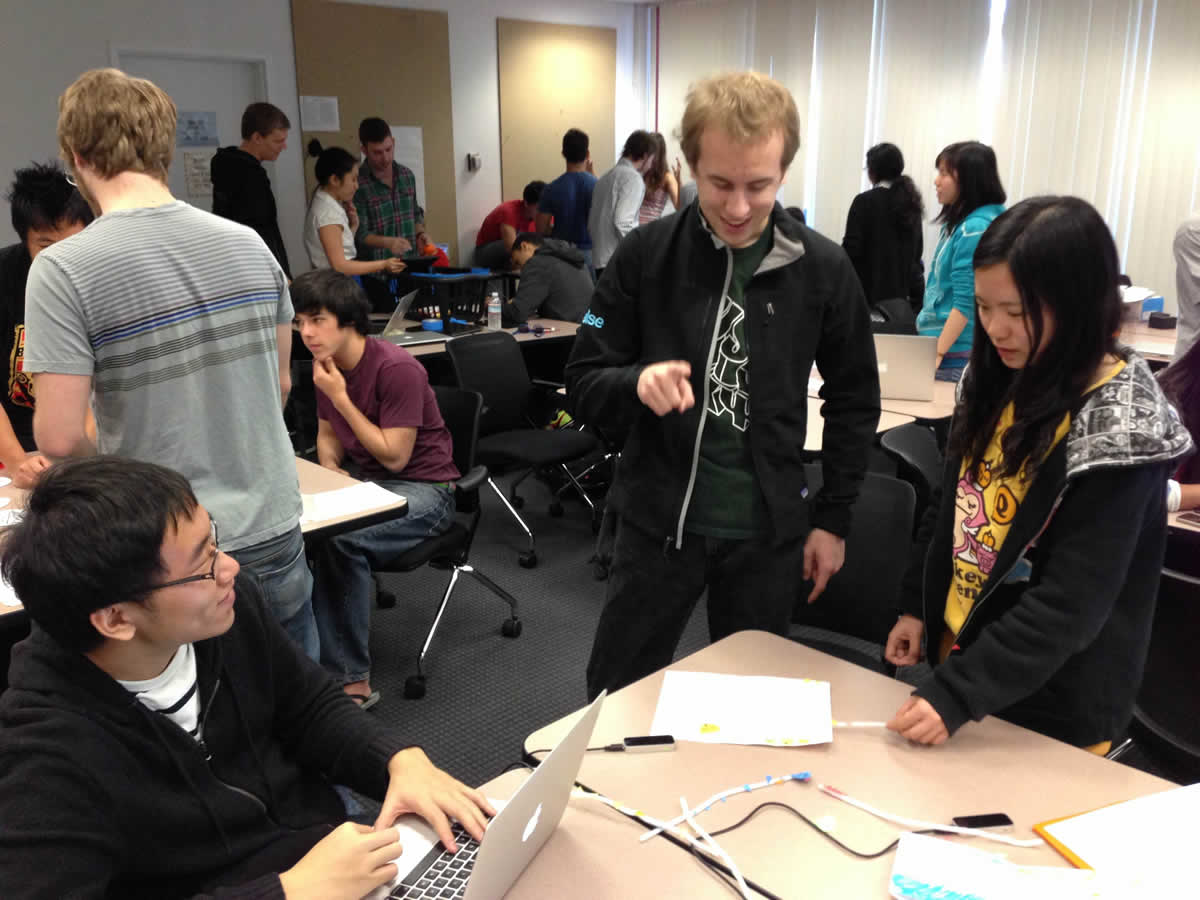

In Thursday's studio, we tested our first prototype on 4 users in class. The task we tested was improvising music. We wanted to know whether the gestures we designed are intuitive and natural, and how users react to the improvised sound.

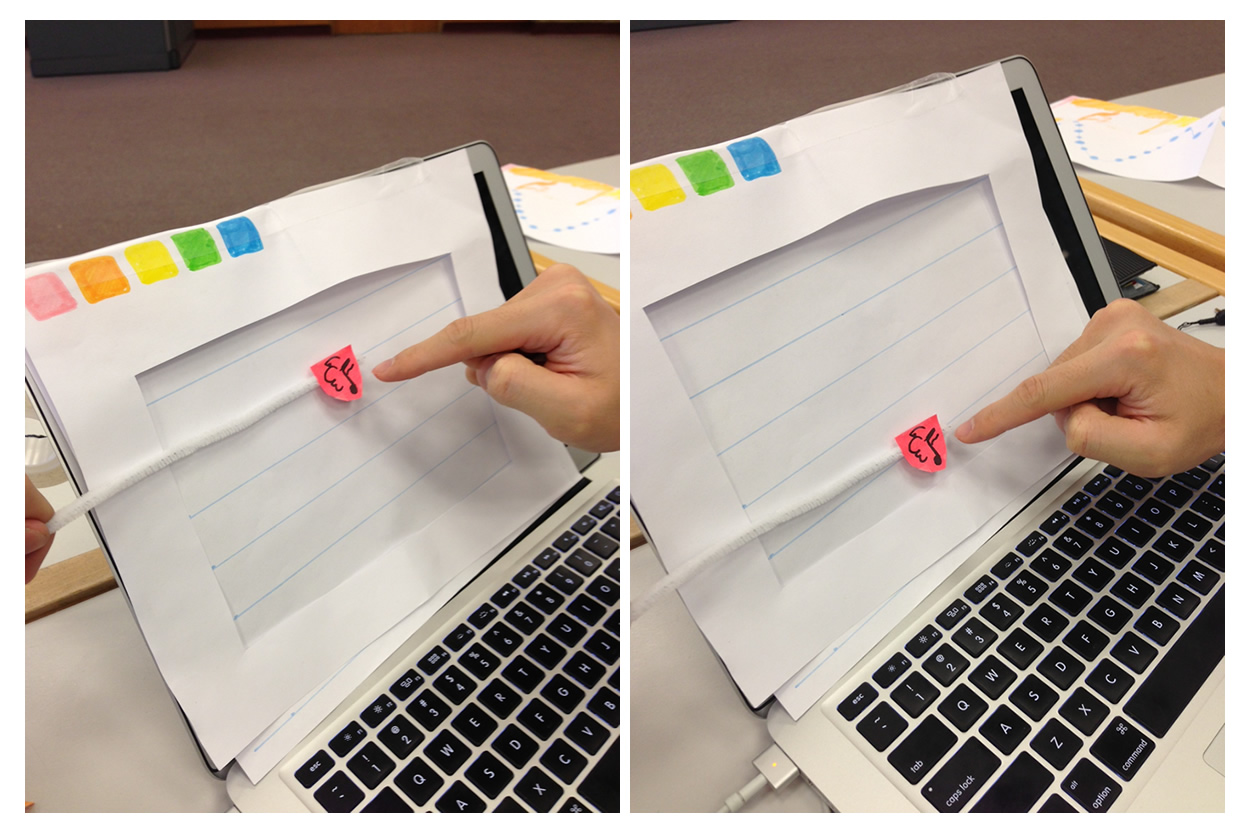

Photo: Wizard props

1. Jason & Matthew

Jason was confused by the selection screen. He didn't understand the relationship between the colors, the numbers and the sounds. He was also concerned about how to identify a user if someone else chooses the same instrument. Matthew thought, based on the instruction page, that the control gesture was a thumbs-up. Because we had only three people (two Sound Wizards and one Visual Wizard shared by both users), this test was a bit messy.

2. A member of the conduction team

Moving left/right to change the volume confused him, because he thought that moving left and right had something to do with moving the music forward/backward. During the game, he pointed at the instruments to select them rather than swiping. He said he forgot that swiping is how to choose instruments.

3. Micheal

Instead of moving one finger up and down to adjust the pitch, Micheal moved his whole palm back and forth and left and right. He was concerned that multiple players would end up making an annoying sound. Micheal suggested that the input gesture should be related to how the instrument is played in reality. For string instruments like the violin, or for more continuous sounds, on/off control is more intuitive; for percussion and beats, poking to trigger the sound makes more sense.

4. Vydia

She stopped after the instruction screen and couldn't figure out how to start. She was also concerned about whether it would sound good if multiple players made sound together. She suggested having some pre-determined music, so that when users move along the pre-determined route they create legitimate music. Using a familiar piece of music, like Jingle Bells, may make it easier to play.

Problems found in test:

- Users' biggest concern is whether it will create legitimate music when played by multiple people.

- Most users were confused or hesitant about how to start the game or what they were supposed to do once the game started.

- Most people didn't figure out that, during the game, they can change instruments by swiping left/right.

- Users might be confused by the relationship between color and sound when choosing a sound.

- Moving left/right to control volume is not intuitive.

Suggestions from testers and our responses:

- Associate each color and sound with an instrument to make the choice clearer when choosing an instrument.

If we were simulating the sounds of real instruments, this would be a good idea. But currently our sounds are just digital sounds, and each color and sound is designed to relate to an emotion. We think Jason's confusion resulted partly from the fact that he didn't hear the preview music. To make this part clear, we'll improve the preview music and visualization for each sound.

- Mark chords with another color.

This is a good idea for guiding users toward more harmonious sound, but the challenge is designing a gesture or mechanism to control each note in a chord. It might be possible to use one finger per note, or to have each user control only one note. We will look into this further.

- Control volume by moving forward and backward.

- Design the gesture for triggering a sound according to the sound itself. If it's a continuous sound, use an on/off gesture; if it's more like a percussion sound, poking is better. Good suggestion!

- Have a pre-determined route for legitimate music.

This will make it more likely for users to generate legitimate music, but the trade-off is that users lose the freedom to improvise and communicate their own feelings through music. Although this might deviate from our goal of providing a way to communicate through music, it may still be an interesting product that makes patients feel happy and saves them from loneliness. The challenge here is how to make it more social.
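
The suggestion above about matching the trigger gesture to the kind of sound could be sketched roughly as follows. This is only an illustration, not our implementation: the function name `note_events`, the `continuous` flag, and the per-frame list of "finger pressed" booleans are all assumptions made for the example, and no Leap API is used.

```python
def note_events(continuous, pressed_frames):
    """Turn per-frame finger state into sound events.

    continuous     -- hypothetical flag: True for sustained sounds (e.g. strings),
                      False for percussive sounds.
    pressed_frames -- hypothetical input: one boolean per frame, True while the
                      finger is in the "pressed"/poking position.
    """
    events = []
    previous = False
    for frame, pressed in enumerate(pressed_frames):
        if pressed and not previous:
            # Finger just went down: start a sustained note, or fire a single hit.
            events.append((frame, "on" if continuous else "hit"))
        elif previous and not pressed and continuous:
            # Finger lifted: only sustained sounds need an explicit "off".
            events.append((frame, "off"))
        previous = pressed
    return events


frames = [False, True, True, False]
print(note_events(True, frames))   # sustained sound: on/off pair
print(note_events(False, frames))  # percussive sound: single hit
```

With this split, holding the finger down sustains a continuous sound, while the same motion on a percussive sound produces one hit per poke.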

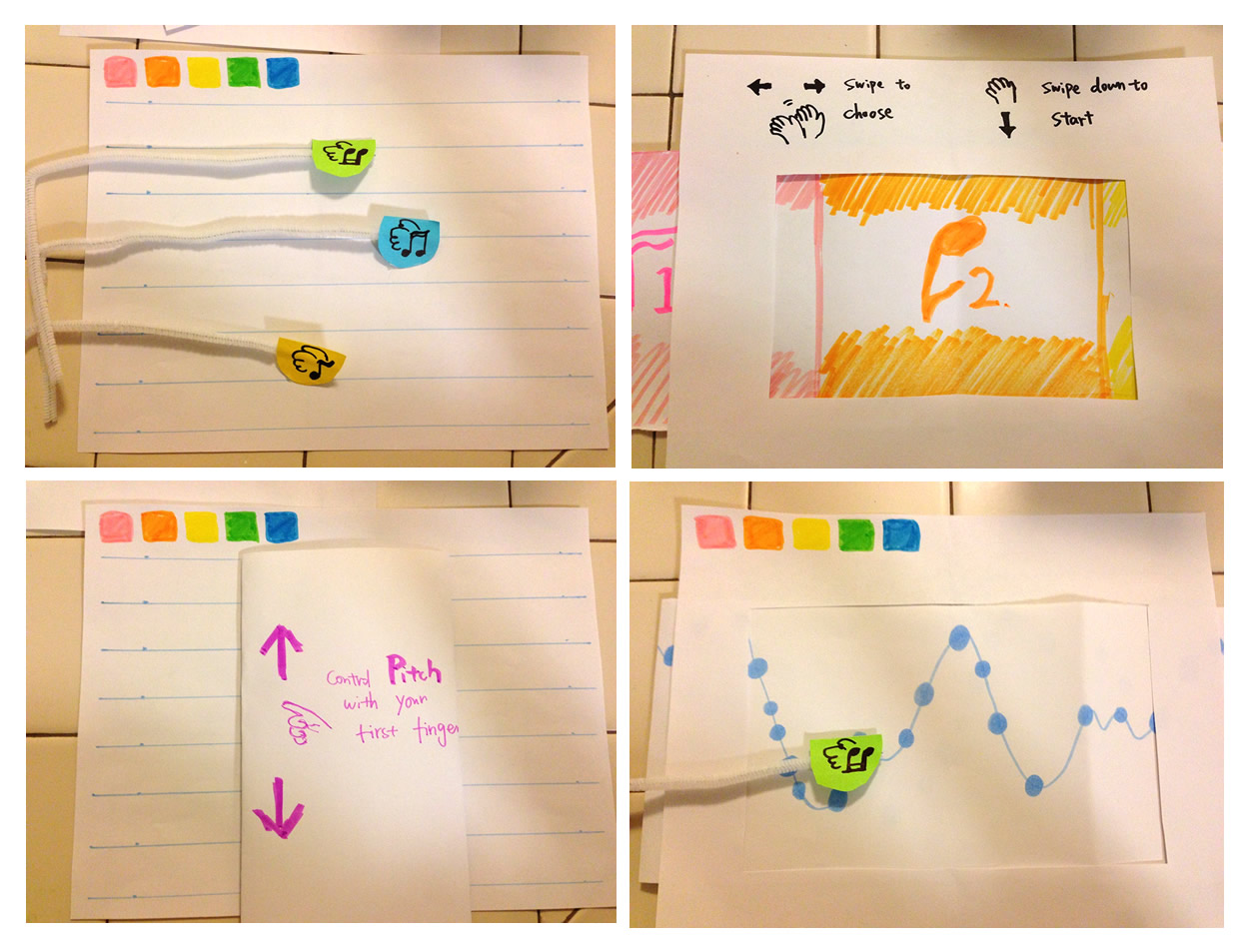

Prototype 2

We built another interface based on the suggestion to have pre-determined music. The wave indicates a "right" way for the user to produce music: if the user moves her finger up and down along the wave, legitimate music is generated. The dots on the wave indicate beats. We want to test whether this prototype makes it easier to interact with the music and react to its response. We also want to find out whether users feel they can express their feelings through this type of interaction.

We tested both prototypes on one user.

- For prototype 1, she showed breakdowns similar to those of the other testers in studio: she hesitated about how to start playing, used her palm to control, and moved around randomly.

- For prototype 2, when the wave came in and moved forward, she intuitively figured out that she should start playing by moving her finger along the displayed route. This might be partly due to her earlier experience with prototype 1, but she was clearly much less hesitant this time. She also spontaneously poked when she saw the dots on the wave.

Prototype 1 sounds more fun, but it's hard to create good music with it and it might not keep users playing for long. Prototype 2 is clearer but is similar to apps like Guitar Hero. She suggested an interesting idea: have one note of the chord determined and played by the computer, and let the user improvise on the other notes of the chord. This may help to make more legitimate music while keeping the improvisation feature.
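
One way to picture this chord idea is the minimal sketch below. It assumes things we have not decided: notes are represented as MIDI note numbers, the chord is a major triad plus the octave, the computer plays the root, and the finger height arrives as a normalized value between 0.0 and 1.0. The names `MAJOR_CHORD_OFFSETS`, `chord_notes` and `user_note` are hypothetical.

```python
# Hypothetical chord shape: semitone offsets from the root (major triad + octave).
MAJOR_CHORD_OFFSETS = [0, 4, 7, 12]


def chord_notes(root_midi, offsets=MAJOR_CHORD_OFFSETS):
    """Return the MIDI note numbers of the chord built on root_midi."""
    return [root_midi + offset for offset in offsets]


def user_note(height, root_midi):
    """Snap a normalized finger height (0.0 = bottom, 1.0 = top) to a chord tone.

    The root is reserved for the computer, so the user can only land on the
    remaining notes of the chord.
    """
    choices = chord_notes(root_midi)[1:]       # skip the computer's root note
    height = min(max(height, 0.0), 1.0)        # clamp out-of-range input
    index = min(int(height * len(choices)), len(choices) - 1)
    return choices[index]


root = 60  # middle C in MIDI numbering
print("computer plays:", root)
print("user plays:", [user_note(h, root) for h in (0.0, 0.5, 1.0)])
```

Because every finger position snaps to a note of the current chord, the user still chooses when and which note to play, but cannot land on a note that clashes with the one the computer is holding.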

Conclusion

Based on the observations, suggestions, and discussion above, we believe that prototype 1's interface will serve our purpose better: it gives users more freedom to express themselves and imposes a lighter cognitive load than following a music pattern, as prototype 2 requires. We will revise prototype 1 by adding more visual cues and better visualization to give the user feedback. We'll also focus on how to get enjoyable sound out of improvisation so that our application does not output random noise.